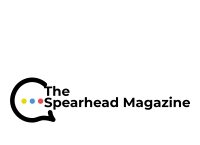

As facial recognition technology becomes commonplace in a wide array of sectors, debates have arisen about the future of privacy for citizens, both online and offline. From retailers making personalized offers to customers to banks adding a layer of security to their ATMs, businesses of all sorts seem eager to adopt this technology.

And it’s not just the private sector that wants to adopt facial recognition. A report by the Government Accountability Office (GAO) revealed that 20 of the 42 U.S. federal agencies that employ law enforcement officers use some form of facial recognition technology. Those agencies include the FBI, the IRS, and the U.S. Capitol Police.

The GAO’s report should worry all of us who are concerned about the chilling effects that police use of facial recognition could have on civil liberties. While facial recognition systems have been touted as tools that could aid law enforcement investigations and prosecutions, many civil rights groups, such as the American Civil Liberties Union (ACLU) and the National Association for the Advancement of Colored People (NAACP), have issued statements raising concerns about their use. These groups fear that facial recognition technology could endanger privacy and other civil liberties.

The Surveillance-Industrial Complex

On a local level, several Big Tech companies have partnered with police departments to implement facial recognition and other surveillance technology. For example, Microsoft developed the Domain Awareness System (DAS), a system described as a “crime-fighting and counterterrorism tool,” in partnership with the New York Police Department (NYPD). Similarly, Ring, the Amazon subsidiary known for its home security cameras, has teamed up with over 2,100 police and fire departments, giving them a channel to request footage directly from Ring users’ cameras.

Both Microsoft and Amazon have touted their surveillance technologies as tools that can help the police fight crime, with Amazon claiming in 2018 that its Ring security cameras reduced crime rates by up to 55 percent in some neighborhoods. Despite these claims, journalistic investigations have cast doubt on whether home surveillance cameras actually reduce crime.

The backlash against police use of facial recognition systems has been enormous. Several major cities, from Boston to San Francisco, have banned cops from using facial recognition technology. The racial justice protests of summer 2020 raised awareness of how facial recognition technology is used to racially profile and target minorities. Amid the protests, major tech companies like Amazon, Microsoft, and IBM stopped or paused selling their facial recognition systems to the police in response to pressure from pro-privacy activists.

Surveillance Revival and Its Consequences

Despite the wave of criminal justice reform in 2019 and 2020, several jurisdictions in the United States have since rolled back their anti-surveillance measures in response to the crime wave of 2021. Lawmakers in the city of New Orleans and the state of Virginia have passed measures reversing restrictions on police use of facial recognition technology. Civil liberties advocates have criticized these measures for empowering cops to collect third-party records without a warrant.

Civil rights advocates have also expressed concerns about how restoring the use of facial recognition systems in law enforcement could affect women and people of color. Experts and activists have criticized facial recognition technology for being far more likely to misclassify people with darker skin tones than those with lighter skin tones. Indeed, research from the MIT Media Lab found double-digit gaps in the accuracy of commercial systems from companies like Amazon, Microsoft, IBM, Face++, and Kairos when it came to classifying dark-skinned women compared with light-skinned men.

For some people, misclassification by a facial recognition system can have devastating consequences. Journalists have documented several cases in which men of color were wrongfully arrested, charged, and in some cases jailed for crimes they never committed, all because an AI program mistook them for someone else. For example, Robert Williams, a Detroit-area resident, was wrongfully arrested in January 2020 for allegedly stealing five watches from a store after facial recognition technology misidentified him.

When to Permit (or Forbid) Facial Recognition Technology

In general, there is a compelling case against allowing the police to use facial recognition tools. There is little evidence that these tools reduce crime, and the threats they pose to civil liberties and fairness in policing are too great to justify their use. The Electronic Frontier Foundation (EFF), a digital rights group based in San Francisco, advocates restricting the acquisition and use of facial recognition data by law enforcement agencies. The EFF also stresses the importance of preventing the police from circumventing citizens’ privacy protections by outsourcing facial recognition to the private sector.

The EFF does, however, accept some limited uses of facial detection in government and law enforcement. Ironically, one of these exceptions involves protecting people’s privacy: using facial detection technology to blur people’s faces before government-held video and image records are released to the public. The EFF also tolerates facial recognition tools that apply only to government employees, such as face locks that keep intruders out of secure government buildings. According to the EFF, these limited systems do not involve creating faceprints of anyone other than government employees, so they pose less of a threat to privacy and civil liberties than broad-based facial recognition systems.