On November 30, 2022, the artificial intelligence (AI) research laboratory OpenAI released a new, innovative chatbot known as ChatGPT. The chatbot allows users to enter any prompt they can think of, and in return, the chatbot will generate a comprehensive response to the prompt. People can use ChatGPT to search for information, generate articles, get ideas for upcoming events, or create entertaining stories. The endless possibilities of ChatGPT have fascinated countless internet users across the globe who have interacted with the chatbot.

However, ChatGPT has also raised concerns about the future of privacy, security, and other important issues on the internet. For example, some critics of ChatGPT fear it will undermine copyright by facilitating the plagiarism of protected works of art. Others have voiced concerns about the erosion of online privacy and data security that may result from granting one chatbot broad access to online information. Further, some experts and commentators have criticized ChatGPT for its tendency to generate wrong answers to questions that its users may ask, which is especially troubling given the chatbot’s authoritative tone.

A Revolutionary New Chatbot

ChatGPT is a chatbot that responds to prompt messages entered by the user. Using its artificial intelligence, ChatGPT generates comprehensive responses to these prompts as if it were engaging in a conversation with the user.

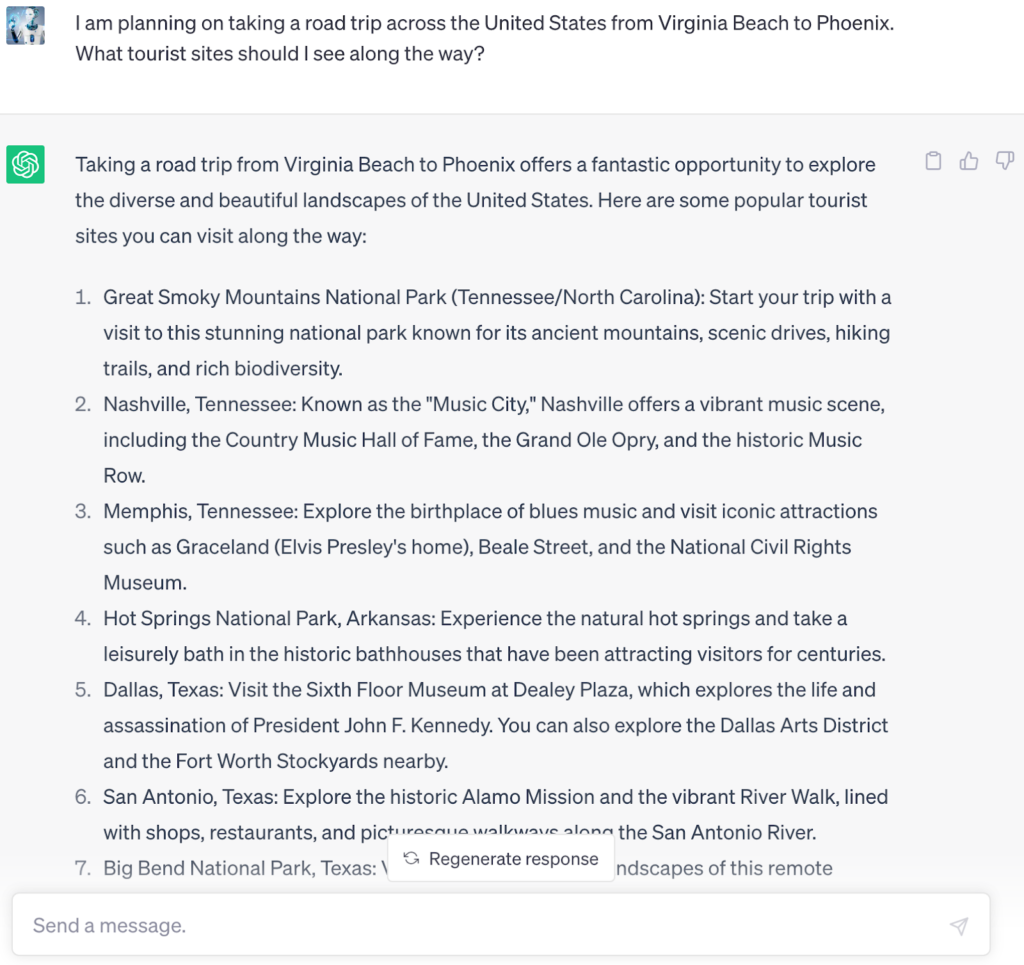

For example, after telling ChatGPT about a “road trip across the United States from Virginia Beach to Phoenix,” the chatbot can initiate a conversation with the user in which it provides a list of tourist sites (e.g., the Great Smoky Mountains, Hot Springs National Park, Big Bend National Park) for the user along with specific details about each recommendation.

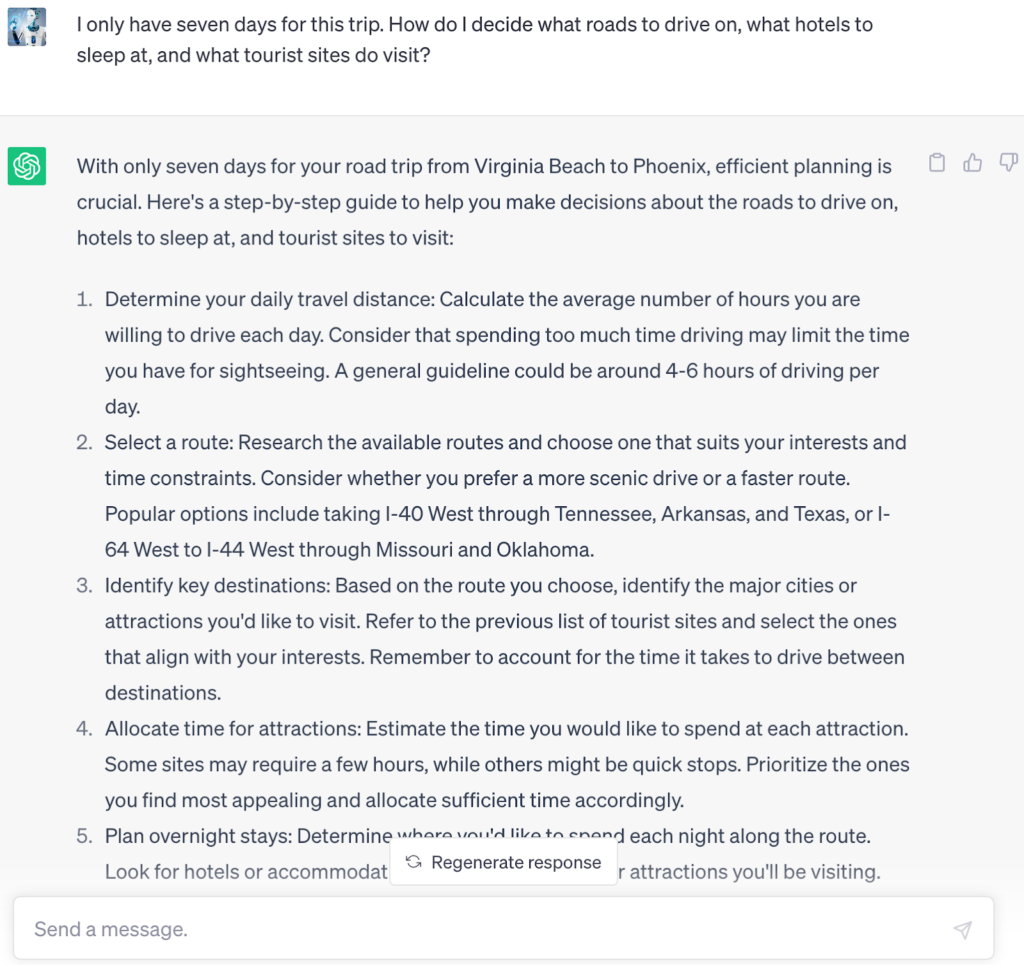

The user can then continue the conversation with ChatGPT by specifying that he or she has only seven days to spend on “this trip.” ChatGPT remembers that “this trip” refers to the “road trip across the United States from Virginia Beach to Phoenix.” The chatbot then generates a list of recommendations about the roads that the user should take, the tourist sites that the user should visit, and the hotels where the user should stay.
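
One common way for a chat interface to provide this kind of memory is to pass the earlier turns of the conversation back to the model along with each new message. The sketch below illustrates that idea only; the `Conversation` class and its method names are hypothetical and do not describe OpenAI's actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical container for a chat transcript (not an OpenAI API)."""

    turns: list = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        # Keep every message so that later turns can refer back to earlier ones.
        self.turns.append((role, text))

    def build_model_input(self) -> str:
        # The whole transcript, not just the latest message, is given to the model.
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


chat = Conversation()
chat.add("user", "Plan a road trip across the United States from Virginia Beach to Phoenix.")
chat.add("assistant", "Here are some tourist sites along the way ...")
chat.add("user", "I only have seven days to spend on this trip.")

model_input = chat.build_model_input()
print(model_input)
```

Because the first message is still part of the input when the third one arrives, a model reading `model_input` has the information it needs to work out what “this trip” means.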

How Was ChatGPT Trained to Converse With Humans?

To make ChatGPT possible, software engineers first “pre-trained” the underlying AI model by feeding it large volumes of text data from internet websites and articles. This text data enabled the model to summarize texts, translate between natural languages, finish a sentence, and perform other basic language-processing tasks. However, the model still lacked the ability to carry on a conversation with a user by responding to a series of user-generated prompts.
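
To give a rough sense of how exposure to text can teach a program to finish a sentence, here is a toy sketch that counts which word tends to follow which and then extends a phrase one word at a time. It is a deliberately tiny stand-in, not the model behind ChatGPT; the function names and the three-sentence corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for each word, which words follow it in the training sentences."""
    next_word_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            next_word_counts[current][following] += 1
    return next_word_counts


def finish_sentence(model, start, max_words=5):
    """Repeatedly append the most frequent next word until none is known."""
    words = start.lower().split()
    for _ in range(max_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the last word never appeared mid-sentence in the corpus
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Made-up training text, far smaller than anything used in practice.
toy_corpus = [
    "the road trip starts in virginia",
    "the road trip ends in phoenix",
    "the road trip starts early",
]
model = train_bigram_model(toy_corpus)
completion = finish_sentence(model, "the road")
print(completion)
```

A model of this kind can continue a phrase, but it has no notion of a prompt and a reply, which is the gap the next training stage is meant to close.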

To give ChatGPT its conversational skills, the software engineers trained the ChatGPT model using a machine learning method called Reinforcement Learning from Human Feedback (RLHF). The first step was to create a supervised learning policy by feeding the AI model a dataset of sample prompts and responses to the prompts written by human AI trainers. Then, the trainers ranked different AI-generated responses to the same prompts based on how appropriate they were within the context of the prompts, and these rankings were used to train a reward model.
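
The ranking step can be sketched as follows. A ranking is broken into pairs of responses, and the reward model is penalized whenever it scores the response the trainers preferred below the one they rejected. The pairwise loss used here is a common choice for reward models, but the article does not say which loss was used for ChatGPT, so treat it as an assumption; the response names and scores are invented.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_responses):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    return list(combinations(ranked_responses, 2))


def pairwise_loss(score_preferred, score_rejected):
    """-log(sigmoid(difference)): small when the preferred response scores higher."""
    diff = score_preferred - score_rejected
    return math.log1p(math.exp(-diff))


# A trainer's ranking for one prompt, best first (hypothetical labels).
ranking = ["response_a", "response_b", "response_c"]
# Made-up scores standing in for the reward model's current outputs.
toy_scores = {"response_a": 2.0, "response_b": 0.5, "response_c": -1.0}

pairs = ranking_to_pairs(ranking)
mean_loss = sum(
    pairwise_loss(toy_scores[preferred], toy_scores[rejected])
    for preferred, rejected in pairs
) / len(pairs)
print(pairs)
print(mean_loss)
```

Training the reward model would mean adjusting its parameters to lower this loss across many ranked prompts, so that its scores come to agree with the trainers' preferences.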

Finally, the supervised policy was fine-tuned using Proximal Policy Optimization (PPO), a reinforcement learning algorithm: The AI model generated responses to different prompts, every response was scored by the reward model, and PPO used these scores to update the policy.
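
The core of PPO is a "clipped" objective that rewards the policy for making well-scored responses more likely while limiting how far a single update can move it. The sketch below shows that objective for one response, using the standard textbook form of PPO. The function name, the default clipping value of 0.2, and the idea of treating the advantage as "reward minus a baseline" are simplifying assumptions, not details taken from OpenAI's training setup.

```python
import math


def clipped_surrogate(new_logprob, old_logprob, advantage, clip_eps=0.2):
    """PPO clipped objective for one sampled response.

    new_logprob / old_logprob: log-probability of the response under the
    updated and the previous policy. advantage: how much better than
    expected the reward model scored it (assumed: reward minus a baseline).
    """
    ratio = math.exp(new_logprob - old_logprob)
    clipped_ratio = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
    # Taking the minimum removes any incentive to push the ratio outside the clip range.
    return min(ratio * advantage, clipped_ratio * advantage)


# A response the reward model liked (positive advantage) that the new
# policy already makes twice as likely: the gain is capped by the clip.
capped_gain = clipped_surrogate(math.log(2.0), 0.0, advantage=1.0)

# A response the reward model disliked (negative advantage) that the new
# policy makes half as likely: further reduction earns no extra credit.
capped_penalty = clipped_surrogate(math.log(0.5), 0.0, advantage=-1.0)
print(capped_gain, capped_penalty)
```

In a full training loop, this objective would be averaged over many sampled responses and maximized with gradient ascent on the policy's parameters.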

The process of creating ChatGPT’s AI model was lengthy and iterative, as the software engineers often had to repeat the same steps in order to improve the supervised policy, the reward model, and the policy fine-tuned with PPO.

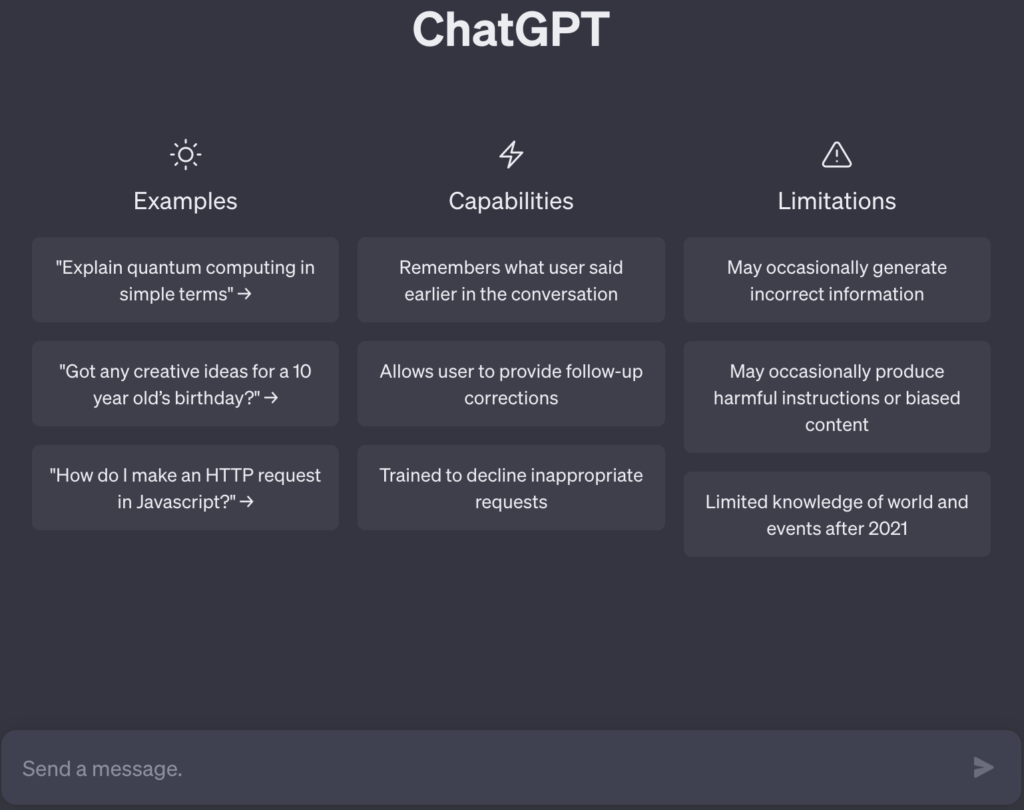

Limits to ChatGPT

Despite the many wonders of ChatGPT, there are limits to how the chatbot can converse with human beings. ChatGPT may sometimes generate false information or incorrect answers to the questions that humans ask. This may occur because the reinforcement learning method used to train ChatGPT has no source of truth against which responses can be checked. The problem is also difficult to fix: if ChatGPT were adjusted by its creators to be more cautious, it might decline to respond to questions that it can answer correctly.

ChatGPT is also sensitive to slight changes in the wording of the prompt that the user enters. The chatbot may be able to answer one version of the prompt, but even a slight tweaking of the prompt can confuse ChatGPT and prevent it from delivering a good response.

In addition, ChatGPT is known to generate excessively wordy responses, even to relatively simple prompts. This may occur because the trainers of ChatGPT preferred longer, more comprehensive responses over shorter but simpler responses, leading to biases in the dataset used to train ChatGPT.

Social, Economic, and Technological Consequences of ChatGPT

Some experts fear that ChatGPT could upend people’s lives in dramatic and often negative ways. Notably, the lack of transparency within OpenAI has caused people to question whether the data used to train ChatGPT was gathered lawfully and ethically.

For example, Italian regulators temporarily banned ChatGPT after learning that its trainers often fed it publicly available personal data, though the chatbot came back online in Italy after OpenAI agreed to add privacy safeguards. ChatGPT has also come under fire after several Samsung employees reportedly entered confidential company data into the chatbot. These incidents have led to concerns that OpenAI may have repeatedly violated privacy and intellectual property laws in its process of training ChatGPT. Such concerns have led companies like Apple to forbid their employees from using the chatbot on company-issued devices.

ChatGPT may also be used by students to cheat on assignments and exams at school or at college. Teachers have already used ChatGPT to generate sample essays that were more sophisticated than anything their students could write. And studies conducted at educational institutions like Stanford University have suggested that some students may already use ChatGPT as an assistance tool on key exams.

The loss of jobs is another concern regarding the rise of ChatGPT. People with occupations that involve a large amount of writing—such as teachers, lawyers, journalists, and (ironically) software developers—may be displaced from the workforce if ChatGPT is used to write their assignments, legal documents, news articles, or computer programs for them.

In addition, some hackers have used the software architecture of ChatGPT to create their own versions of the chatbot that are designed to generate malware and phishing emails. While OpenAI does not allow ChatGPT to produce harmful content, some individuals have been able to circumvent these restrictions, and the modified versions of the chatbot can generate output that may lead to real-world harm if it is used to produce ransomware or Trojan horses.

Despite these risks associated with ChatGPT, people can work together to hold OpenAI accountable for any real-world harm that its chatbot may cause. Privacy activists may demand stricter measures to prevent data and security breaches within ChatGPT. Individuals should also ask OpenAI to make modifications to ChatGPT that would prevent the chatbot from generating wrong answers and false information. As more people begin to use ChatGPT, it is important that people learn how to utilize its many features in a safe and effective way.